Edge computing vs cloud computing: The choice between these two powerful computing paradigms often hinges on the specific needs of an application. While cloud computing relies on centralized data centers, edge computing processes data closer to its source, offering distinct advantages in terms of latency, bandwidth, and security. This comparison delves into the key differences between these approaches, exploring their strengths and weaknesses to help you determine the best fit for your projects.

This exploration will cover the core architectural differences, examining how latency and response times are affected, and analyzing data processing and storage capabilities. We’ll also discuss bandwidth requirements, security considerations, cost implications, application suitability, scalability, and deployment models. By the end, you’ll have a clear understanding of which computing model – edge or cloud – best suits your needs.

Defining Edge and Cloud Computing

Edge computing and cloud computing represent distinct approaches to data processing and storage, each with its own strengths and weaknesses. Understanding their fundamental differences is crucial for selecting the optimal solution for various applications. This section will define both concepts and compare their architectural underpinnings.

Edge computing processes data closer to its source, often at the network’s edge, rather than relying on a centralized cloud data center. This proximity shortens response times and reduces the amount of data sent over the network, which especially benefits real-time applications like autonomous vehicles or industrial automation.

Edge Computing Definition

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the sources of data. This minimizes latency, improves bandwidth efficiency, and enhances real-time responsiveness. Examples include processing sensor data on a factory floor or analyzing video feeds from security cameras locally before transmission to a central server.

Cloud Computing Definition

Cloud computing delivers computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet (“the cloud”). Instead of owning and maintaining physical data centers and servers, users access these resources on demand from a cloud provider, paying only for what they use. This model offers scalability, flexibility, and cost-effectiveness.

Architectural Comparison of Edge and Cloud Computing

The core difference lies in the location of data processing. Cloud computing relies on centralized data centers, often geographically distant from the data sources. This architecture excels in managing large datasets and complex computations but can introduce latency issues for time-sensitive applications. In contrast, edge computing distributes processing power to the network edge, minimizing latency and improving responsiveness. This distributed nature necessitates robust network infrastructure and efficient data management strategies at each edge node.
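
To put rough numbers on the latency point, the sketch below estimates the lower bound that physical distance alone places on round-trip time. It assumes light in optical fiber covers about 200 km per millisecond (roughly two-thirds of its speed in a vacuum), and the distances are hypothetical. Queuing, routing detours, and processing would only add to these figures.

```python
# Assumption: light in optical fiber travels roughly 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores queuing, routing detours, serialization, and processing time,
    so real round trips will always be longer than this estimate.
    """
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# Hypothetical distances: an on-site edge node vs. a regional cloud data center.
for label, km in [("edge node, 1 km away", 1), ("cloud region, 1,500 km away", 1500)]:
    print(f"{label}: >= {min_round_trip_ms(km):.2f} ms round trip")
```

Under these assumptions the nearby edge node adds about 0.01 ms of propagation delay, while the distant region adds at least 15 ms before any processing happens.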

Cloud computing is centralized; edge computing is decentralized.

Consider a smart city application: In a cloud-based approach, all sensor data (traffic, weather, pollution) would be transmitted to a central cloud server for processing and analysis. This introduces latency and potential bandwidth bottlenecks. An edge computing approach would process much of this data locally at smaller edge nodes located throughout the city, sending only aggregated or critical data to the cloud for further analysis. This significantly reduces latency and bandwidth requirements, enabling faster and more efficient responses to real-time events.
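
The edge-side step in this example can be sketched as follows: a node summarizes each window of raw sensor readings and forwards only the summary plus any readings that cross an alert threshold. The function name, summary fields, sensor ID, and threshold are illustrative assumptions, not a specific platform's API.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Sequence, Tuple


@dataclass
class WindowSummary:
    sensor_id: str
    count: int
    mean_value: float
    peak: float


def process_window(sensor_id: str, readings: Sequence[float],
                   alert_threshold: float) -> Tuple[WindowSummary, List[float]]:
    """Summarize one window of raw readings on a (hypothetical) edge node.

    Returns the compact summary that would be forwarded to the cloud and the
    individual readings above alert_threshold that warrant immediate action.
    All other raw readings stay on the node.
    """
    if not readings:
        raise ValueError("window contains no readings")
    summary = WindowSummary(sensor_id, len(readings), mean(readings), max(readings))
    alerts = [r for r in readings if r > alert_threshold]
    return summary, alerts


# Hypothetical PM2.5 readings from one city air-quality sensor.
summary, alerts = process_window("pm25-sensor-7", [12.0, 14.0, 13.0, 55.0], alert_threshold=50.0)
print(summary.count, summary.mean_value, summary.peak)  # 4 23.5 55.0
print(alerts)                                           # [55.0]
```

Four raw readings collapse into one summary record, and only the out-of-range value needs to travel upstream right away.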

The debate between edge computing and cloud computing often centers on latency and where data is processed. Broader cloud computing trends also shape the viability and application of both approaches, and in practice the best solution often involves a hybrid model that leverages the strengths of each.

Data Processing and Storage

The fundamental difference between edge and cloud computing lies in where data is processed and stored. Cloud computing relies on centralized data centers for both, while edge computing pushes processing and storage closer to the data source, often at the network’s edge. This proximity significantly impacts latency, bandwidth requirements, and overall system efficiency. Let’s delve into the specifics of data processing and storage in both environments.

Edge and cloud computing employ different approaches to data processing, driven by their respective architectures. Cloud computing leverages powerful, centralized servers to handle large-scale data processing tasks. These servers are typically equipped with advanced processors and ample memory, allowing for complex computations and analysis. In contrast, edge computing uses smaller, less powerful devices located closer to the data source, such as gateways, routers, or specialized embedded systems. The processing power available at the edge is naturally constrained compared to a cloud data center, so the tasks suited to the edge are generally less complex but often more time-sensitive.

Edge Computing Data Storage Methods

Edge computing necessitates robust yet efficient data storage solutions tailored to its distributed nature. Several methods are employed, each with its strengths and weaknesses. These include local storage on the edge device itself (e.g., SSDs, embedded flash memory), distributed storage systems spanning multiple edge devices, and hybrid approaches that combine local storage with cloud-based backup and archiving. The choice depends on factors like data volume, latency requirements, and the desired level of redundancy and data security. For instance, a smart factory might use local storage for immediate processing needs, while regularly syncing crucial data to a cloud-based repository for long-term storage and analysis.
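
The hybrid approach described above, local storage with periodic syncing to the cloud, is often implemented as a store-and-forward buffer. The class below is a minimal, single-threaded sketch with hypothetical names. The `upload` callable stands in for whatever cloud client a real deployment would use and is assumed to return True only when the batch was stored successfully.

```python
from collections import deque
from itertools import islice
from typing import Callable, List


class StoreAndForwardBuffer:
    """Hypothetical local buffer for an edge device with intermittent connectivity.

    Records are held locally in a bounded queue (the oldest is dropped when
    full) and pushed to the cloud in batches when sync() is called. If an
    upload fails, the batch stays in the buffer for the next attempt.
    Not thread-safe: assumes record() and sync() run on the same thread.
    """

    def __init__(self, capacity: int, upload: Callable[[List], bool]):
        self._buffer = deque(maxlen=capacity)  # deque discards the oldest item when full
        self._upload = upload                  # assumed: returns True on success

    def record(self, item) -> None:
        self._buffer.append(item)

    def sync(self, batch_size: int = 100) -> int:
        """Upload up to batch_size of the oldest records; return how many were synced."""
        batch = list(islice(self._buffer, batch_size))
        if not batch:
            return 0
        if not self._upload(batch):
            return 0  # keep the data locally and retry on a later sync
        for _ in batch:
            self._buffer.popleft()
        return len(batch)

    def __len__(self) -> int:
        return len(self._buffer)


uploaded = []


def fake_upload(batch):
    # Stand-in for a real cloud client; always succeeds in this example.
    uploaded.extend(batch)
    return True


buf = StoreAndForwardBuffer(capacity=3, upload=fake_upload)
for reading in [1, 2, 3, 4]:
    buf.record(reading)          # capacity is 3, so reading 1 is dropped
print(buf.sync(batch_size=2))    # 2
print(uploaded, len(buf))        # [2, 3] 1
```

Bounding the buffer trades completeness for predictable memory use; a device that cannot afford to lose data would need more local storage or back-pressure on its data sources instead.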

Cloud Computing Data Storage Methods

Cloud data storage is typically characterized by massive scale and centralized management. Cloud providers offer various storage options, including object storage (such as Amazon S3 or Google Cloud Storage), block storage (such as Amazon EBS), and file storage (such as Amazon EFS or Google Cloud Filestore). These services are designed for high availability, scalability, and durability. Data is replicated across multiple devices and, depending on the service and configuration, across multiple data centers to provide redundancy and fault tolerance. Advanced features like data encryption, access control, and data lifecycle management are also commonly integrated. The scalability of cloud storage allows businesses to accommodate growing data volumes without significant upfront investment in infrastructure.

Data Locality: Advantages and Disadvantages

Data locality, the principle of keeping data close to where it’s processed, is a key differentiator between edge and cloud computing.

Edge Computing: Advantages of data locality include reduced latency, improved responsiveness, and enhanced privacy and security (since data doesn’t need to travel to a distant data center). Disadvantages include limited storage capacity on individual edge devices, potential for data silos (making data aggregation and analysis more challenging), and increased complexity in managing distributed storage systems.

Cloud Computing: Advantages of centralized storage lie in its scalability, ease of management, and high availability. Disadvantages include increased latency, higher bandwidth requirements for data transfer, and potential concerns about data sovereignty and security when data is stored in geographically distant locations.
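
The bandwidth cost of centralization is easy to estimate. The sketch below compares shipping every raw sample to the cloud with sending one summary per sensor per minute; the fleet size, sample size, and rates are hypothetical, and protocol overhead is ignored.

```python
def required_uplink_mbps(devices: int, bytes_per_sample: int, samples_per_sec: float) -> float:
    """Sustained uplink (megabits per second) needed to send every sample.

    Ignores protocol overhead, compression, and retransmissions.
    """
    bits_per_sec = devices * bytes_per_sample * 8 * samples_per_sec
    return bits_per_sec / 1_000_000


# Hypothetical fleet: 1,000 sensors producing 200-byte samples 10 times per second.
raw = required_uplink_mbps(1000, 200, 10)             # all raw data goes to the cloud
summarized = required_uplink_mbps(1000, 200, 1 / 60)  # one 200-byte summary per sensor per minute
print(f"raw: {raw:.1f} Mbps, summarized: {summarized:.3f} Mbps")
```

Under these assumptions, raw forwarding needs about 16 Mbps of sustained uplink, while per-minute summaries need under 0.03 Mbps, roughly a 600-fold reduction.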

Security Considerations

The shift towards edge computing introduces a new layer of complexity to data security. While cloud computing centralizes security management, edge computing distributes it across numerous geographically dispersed devices. This decentralized nature presents unique challenges and necessitates a multifaceted approach to safeguarding sensitive information. Effective security strategies must account for the diverse security profiles of edge devices and the potential for increased attack surfaces.

Edge and cloud computing employ different security strategies due to their inherent architectural differences. Cloud environments benefit from robust, centralized security infrastructure and dedicated security teams. Edge deployments, however, often rely on less sophisticated security measures due to resource constraints on individual devices and the difficulties in managing security updates across a large, distributed network. This disparity highlights the need for tailored security approaches specific to each environment.

Unique Security Challenges in Edge Computing Deployments

The distributed nature of edge computing introduces several unique security challenges. One major concern is the potential for compromised edge devices to become entry points for attacks. These devices, often located in less secure environments, are more vulnerable to physical tampering or malware infections. Furthermore, the limited processing power and storage capacity of many edge devices can hinder the implementation of comprehensive security measures. Network connectivity issues at edge locations can also complicate security management and incident response. Finally, ensuring consistent security patching and updates across a vast network of edge devices presents a significant logistical hurdle.

Comparison of Security Measures in Edge and Cloud Environments

Cloud computing typically employs robust security measures such as data encryption at rest and in transit, access control lists, intrusion detection systems, and regular security audits. These measures are centralized, allowing for efficient management and monitoring. Edge computing, while also utilizing encryption and access controls, often relies on more lightweight security solutions due to resource limitations. Real-time threat detection and response might be more challenging at the edge, requiring a different approach than the centralized monitoring prevalent in cloud environments. For example, a cloud environment might utilize a sophisticated Security Information and Event Management (SIEM) system, while an edge deployment might rely on simpler local logging and anomaly detection.
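
As an example of the simpler local anomaly detection mentioned above, the sketch below flags readings whose z-score against a sliding window of recent values exceeds a threshold. The window size of 30 and the threshold of 3 standard deviations are assumed defaults; a real deployment would tune them and would likely forward flagged events to a central system.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flag readings that deviate strongly from the recent window.

    Memory is bounded by the window size, which suits resource-constrained
    edge devices. Window size and threshold are assumptions to tune.
    """

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self._history = deque(maxlen=window)
        self._threshold = threshold

    def is_anomalous(self, value: float) -> bool:
        anomalous = False
        if len(self._history) >= 2:
            mu = mean(self._history)
            sigma = pstdev(self._history)
            # With zero spread the z-score is undefined, so nothing is flagged.
            if sigma > 0 and abs(value - mu) / sigma > self._threshold:
                anomalous = True
        self._history.append(value)  # every reading, anomalous or not, joins the window
        return anomalous


detector = RollingZScoreDetector(window=30, threshold=3.0)
baseline = [20.0, 21.0] * 5
flags = [detector.is_anomalous(v) for v in baseline]
print(any(flags))   # False
spike = detector.is_anomalous(35.0)
print(spike)        # True
```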

Best Practices for Securing Data in Edge and Cloud Architectures

Effective data security in both edge and cloud architectures requires a multi-layered approach. For cloud environments, this includes robust identity and access management (IAM), regular vulnerability patching, and encryption. Data loss prevention (DLP) tools should be deployed to monitor and prevent sensitive data from leaving the controlled environment, and regular security audits and penetration testing help identify and mitigate potential weaknesses.

For edge deployments, securing physical access to devices is paramount, along with secure boot processes that prevent unauthorized software from executing. Remote device management capabilities are crucial for efficient patching and configuration updates.

Finally, both environments need a robust incident response plan to minimize the impact of any security breach. The plan should outline procedures for detection, containment, eradication, recovery, and post-incident analysis. Regular security training for personnel handling data in both edge and cloud environments is also a vital part of a comprehensive security strategy.
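
Secure boot and remote patching both rest on the device refusing software it cannot authenticate. The sketch below shows that check using an HMAC-SHA256 tag from Python's standard library. It is a simplification: production update systems typically use asymmetric signatures (for example Ed25519) so that devices hold only a public key, and the key and payload here are placeholders.

```python
import hashlib
import hmac


def sign_update(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for an update payload (management side)."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_update(payload: bytes, tag: str, key: bytes) -> bool:
    """Check an update's tag on the device before installing it.

    hmac.compare_digest compares in constant time to avoid leaking
    information through timing differences.
    """
    expected = sign_update(payload, key)
    return hmac.compare_digest(expected, tag)


key = b"hypothetical-shared-key"  # placeholder; never hard-code real keys
firmware = b"firmware image v2.1"
tag = sign_update(firmware, key)
print(verify_update(firmware, tag, key))            # True
print(verify_update(firmware + b"\x00", tag, key))  # False: payload was altered
```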

Application Suitability

Choosing between edge and cloud computing hinges significantly on the specific application’s requirements. The optimal choice depends on factors like latency sensitivity, bandwidth availability, data volume, security needs, and cost considerations. Understanding these factors allows for informed decision-making, leading to efficient and effective deployment.

Applications best suited for edge computing and cloud computing possess distinct characteristics. The key differentiator lies in the need for immediate processing and low latency versus the tolerance for higher latency and the potential for large-scale data processing.

Applications Best Suited for Edge Computing

Edge computing excels when immediate processing is critical. Low latency is paramount for real-time applications where the delay introduced by sending data to the cloud and receiving a response is unacceptable. This necessitates processing data locally at the edge, closer to the source.

- Autonomous Vehicles: Real-time object detection and navigation require instantaneous processing of sensor data. Sending this data to the cloud would introduce unacceptable delays, potentially resulting in accidents.

- Industrial IoT (IIoT): In manufacturing, immediate feedback loops are essential for process optimization and anomaly detection. Edge computing enables real-time monitoring and control of machinery, leading to improved efficiency and reduced downtime.

- Video Surveillance: Analyzing video streams in real-time for security purposes requires low latency. Edge computing allows for immediate threat detection and response, without relying on cloud-based processing.

- Smart City Applications: Real-time traffic management, environmental monitoring, and smart lighting systems benefit from the low latency and reduced bandwidth consumption provided by edge computing.
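
The autonomous-vehicle case can be made concrete by converting latency into distance travelled. The sketch assumes a vehicle at 100 km/h and compares a hypothetical 10 ms on-board inference path with a hypothetical 150 ms cloud round trip; actual figures vary widely with hardware and network conditions.

```python
def distance_during_delay_m(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled at constant speed before a delayed decision can take effect."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Hypothetical latencies for the same decision made on board vs. in the cloud.
for label, delay in [("on-board (edge)", 10), ("cloud round trip", 150)]:
    print(f"{label}: {distance_during_delay_m(100, delay):.2f} m travelled")
```

At 100 km/h the vehicle covers about 0.28 m during the edge path and about 4.17 m during the cloud round trip before the decision can take effect.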

Applications Best Suited for Cloud Computing

Cloud computing is ideal for applications that can tolerate higher latency and benefit from the scalability and resources offered by large data centers. Data volume and computational intensity are key factors favoring cloud-based solutions.

- Big Data Analytics: Processing massive datasets for insights requires the computational power and storage capacity of cloud infrastructure. Cloud-based analytics platforms offer the necessary resources for complex data analysis.

- Machine Learning Model Training: Training large machine learning models often requires significant computational resources. Cloud computing provides the scalability and infrastructure needed for efficient model training.

- Data Warehousing and Business Intelligence: Storing and analyzing large volumes of business data for reporting and decision-making is best handled by cloud-based data warehouses.

- Software as a Service (SaaS): Cloud-based SaaS applications leverage the scalability and accessibility of cloud infrastructure to deliver software services to a wide range of users.

Criteria for Selecting the Appropriate Computing Model

The choice between edge and cloud computing depends on a careful evaluation of several factors. A balanced assessment is crucial for optimizing performance, cost, and security.

- Latency Requirements: Applications requiring real-time processing and low latency should be deployed at the edge. Those with higher latency tolerance can leverage cloud computing.

- Bandwidth Availability: Limited bandwidth necessitates edge computing to minimize data transfer. Sufficient bandwidth allows for cloud-based solutions.

- Data Volume and Processing Needs: Applications generating large volumes of data or requiring extensive processing may benefit from cloud computing’s scalability.

- Security Considerations: Sensitive data may necessitate edge computing to minimize exposure during transmission. Cloud security measures must be considered when choosing cloud-based solutions.

- Cost Analysis: Edge computing can involve higher upfront costs for infrastructure deployment. Cloud computing offers a pay-as-you-go model, but costs can escalate with increased usage.
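
The criteria above can be encoded as a rough rule of thumb. The sketch below is illustrative only: the field names and decision rules are assumptions rather than an established method, and it deliberately leaves out cost, which usually needs a separate comparison of upfront hardware against ongoing usage charges.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    latency_budget_ms: float    # worst-case response time the application tolerates
    data_rate_mbps: float       # raw data the workload generates
    needs_heavy_compute: bool   # e.g. large-scale analytics or model training
    data_must_stay_local: bool  # e.g. a regulatory or privacy constraint


def suggest_placement(w: Workload, cloud_rtt_ms: float, uplink_mbps: float) -> str:
    """Hypothetical rule of thumb mapping the selection criteria to a placement."""
    edge_reasons = []
    if w.latency_budget_ms < cloud_rtt_ms:
        edge_reasons.append("latency")
    if w.data_rate_mbps > uplink_mbps:
        edge_reasons.append("bandwidth")
    if w.data_must_stay_local:
        edge_reasons.append("data locality")
    if edge_reasons and w.needs_heavy_compute:
        return "hybrid (" + ", ".join(edge_reasons) + " at the edge; heavy compute in the cloud)"
    if edge_reasons:
        return "edge (" + ", ".join(edge_reasons) + ")"
    return "cloud"


vehicle = Workload(latency_budget_ms=20, data_rate_mbps=50,
                   needs_heavy_compute=False, data_must_stay_local=False)
analytics = Workload(latency_budget_ms=5000, data_rate_mbps=1,
                     needs_heavy_compute=True, data_must_stay_local=False)
print(suggest_placement(vehicle, cloud_rtt_ms=80, uplink_mbps=20))    # edge (latency, bandwidth)
print(suggest_placement(analytics, cloud_rtt_ms=80, uplink_mbps=20))  # cloud
```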

Deployment Models and Architectures

Deployment models and architectures significantly influence the effectiveness and efficiency of both edge and cloud computing. Understanding these differences is crucial for selecting the optimal solution for specific applications and business needs. The choice depends on factors such as latency requirements, data volume, security concerns, and budget.

Edge Computing Deployment Models

Edge computing deployments vary greatly depending on the specific needs of an application. The location and scale of the edge infrastructure are key differentiators.

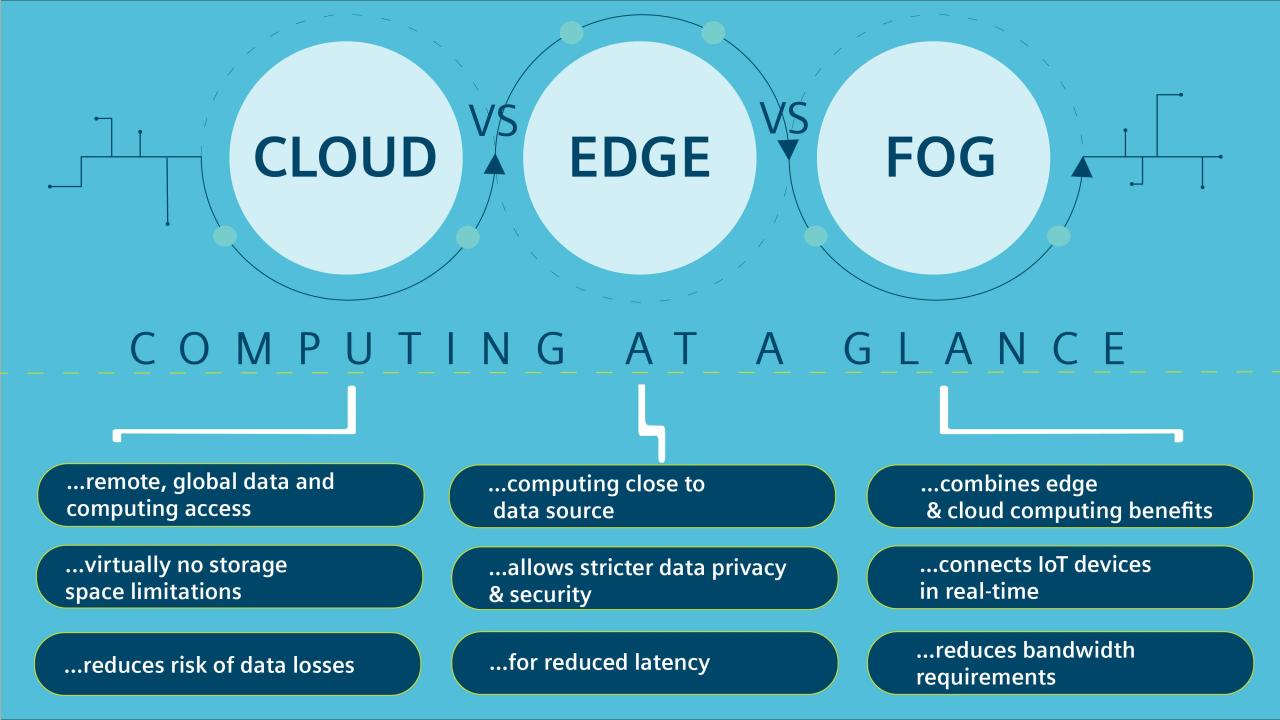

- Fog Computing: This model extends cloud computing capabilities closer to the data source, typically within a local network or geographical area. Fog nodes are distributed strategically, acting as intermediaries between the edge devices and the cloud. This architecture allows for processing and storage closer to the source, reducing latency and bandwidth consumption. An example would be a network of sensors in a smart city, processing data locally before sending aggregated information to the cloud for further analysis.

- Micro-datacenters: These are small-scale data centers deployed at the edge, often in remote locations or within individual businesses. They offer increased control and security over data, as well as reduced latency. A retail chain might deploy micro-datacenters in each store to process point-of-sale transactions locally and improve responsiveness.

- Mobile Edge Computing (MEC): This model, now standardized by ETSI under the name Multi-access Edge Computing, deploys computing resources at the edge of mobile networks, closer to mobile devices. This is especially beneficial for applications requiring low latency, such as augmented reality or real-time gaming. An example would be a game server located within a cellular network to minimize lag for mobile gamers.

Cloud Computing Architectures

Cloud computing offers a range of deployment models, each with its own advantages and disadvantages.

- Public Cloud: This model involves using cloud services provided by a third-party provider, such as AWS, Azure, or Google Cloud. Resources are shared among multiple users, leading to cost-effectiveness but potentially reduced control and security. A startup company might choose a public cloud for its scalability and affordability.

- Private Cloud: This model involves deploying cloud resources within an organization’s own infrastructure. This offers greater control and security but requires significant investment in infrastructure and management. A large financial institution might opt for a private cloud to maintain strict compliance requirements.

- Hybrid Cloud: This model combines both public and private cloud resources, allowing organizations to leverage the benefits of both. Sensitive data can be stored in a private cloud, while less sensitive data can be processed in a public cloud. A large enterprise might use a hybrid cloud to balance cost, security, and scalability needs.
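
The hybrid split described for sensitive data can be expressed as a simple routing rule. In the sketch below, the sensitivity labels and target names are hypothetical placeholders, and where to draw the line between "sensitive" and "less sensitive" is a policy decision. Records without a label go to the private cloud so that a missing classification fails closed rather than exposing data.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    RESTRICTED = "restricted"


# Hypothetical destination names; a real system would map these to actual storage endpoints.
PRIVATE_CLOUD = "private-cloud"
PUBLIC_CLOUD = "public-cloud"


def choose_target(record: dict) -> str:
    """Route restricted (or unlabeled) records privately and everything else publicly."""
    label = record.get("sensitivity", Sensitivity.RESTRICTED)  # unlabeled: treat as restricted
    return PRIVATE_CLOUD if label is Sensitivity.RESTRICTED else PUBLIC_CLOUD


print(choose_target({"id": 1, "sensitivity": Sensitivity.PUBLIC}))      # public-cloud
print(choose_target({"id": 2, "sensitivity": Sensitivity.RESTRICTED}))  # private-cloud
print(choose_target({"id": 3}))                                         # private-cloud
```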

Comparison of Edge and Cloud Deployment Models

The choice between edge and cloud computing, or a hybrid approach, depends heavily on the application’s specific requirements. Edge computing excels in scenarios demanding ultra-low latency and real-time processing, while cloud computing provides scalability and cost-effectiveness for larger datasets and less time-sensitive tasks. A hybrid approach often offers the best balance, leveraging the strengths of both. For example, an autonomous vehicle might process sensor data at the edge for immediate reactions, while sending aggregated data to the cloud for long-term analysis and machine learning.

Ultimately, the decision between edge and cloud computing depends on a careful evaluation of your application’s specific requirements. While cloud computing excels in scalability and centralized management, edge computing shines in scenarios demanding low latency, enhanced security, and localized data processing. Understanding the trade-offs between these approaches is crucial for making informed decisions that optimize performance, cost-effectiveness, and security.