The future of generative AI tools promises a transformative era across industries. From revolutionizing healthcare with personalized treatments to unleashing creative potential in art, music, and literature, generative AI is poised to reshape our world. This exploration examines the capabilities, limitations, and ethical considerations surrounding this rapidly evolving technology, offering a glimpse into its potential impact on society and the economy.

We will examine the advancements in underlying technologies, the crucial role of data and training, and the imperative of ensuring accessibility and responsible development. The economic implications, security and privacy concerns, and future research directions will also be carefully considered, painting a comprehensive picture of this powerful technology’s trajectory.

Generative AI Tool Capabilities

Generative AI tools are rapidly evolving, exhibiting impressive capabilities while simultaneously grappling with significant limitations. Understanding both their strengths and weaknesses is crucial for navigating their current applications and anticipating future advancements. This section will explore the current state of generative AI, its limitations, and potential future developments.

Current Capabilities of Leading Generative AI Tools

Leading generative AI tools, such as GPT-3, DALL-E 2, and Stable Diffusion, demonstrate remarkable abilities in various domains. GPT-3 excels at generating human-like text, translating between languages, writing many kinds of creative content, and answering questions. DALL-E 2 and Stable Diffusion generate high-resolution images and artwork from text descriptions, offering considerable control over visual details. These tools rely on deep learning architectures, specifically large language models (LLMs) and diffusion models. They learn statistical patterns and relationships from massive datasets, which lets them generate novel content that resembles the data they were trained on.
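The core idea of learning patterns from data and then sampling new content that resembles it can be shown with a deliberately tiny model. The sketch below is a toy bigram word model, vastly simpler than an LLM or a diffusion model and not how GPT-3 or any other named tool works internally; the corpus is made up. It records which words follow which in the training text, then generates new sequences by repeatedly sampling an observed continuation.

```python
import random
from collections import defaultdict

def train_bigrams(corpus):
    """Record which words follow each word in the training text."""
    followers = defaultdict(list)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words, rng):
    """Generate text by repeatedly sampling a word seen after the current one."""
    output = [start]
    for _ in range(max_words - 1):
        options = followers.get(output[-1])  # .get does not create new keys
        if not options:  # no observed continuation: stop early
            break
        output.append(rng.choice(options))
    return " ".join(output)

# Tiny made-up corpus; real models train on vastly larger datasets.
corpus = "the cat sat on the mat the dog sat on the rug"
model = train_bigrams(corpus)
print(generate(model, "the", 8, random.Random(42)))
```

Every adjacent word pair in the output appeared somewhere in the training text, yet the full sentence may be new. Real generative models capture far richer, longer-range structure, but the learn-then-sample principle is the same.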

Limitations of Existing Generative AI Tools

Despite their capabilities, current generative AI tools face several limitations. One major constraint is their dependence on training data: biases in that data can surface in the generated output, perpetuating the societal prejudices it contains. Furthermore, these models often struggle with factual accuracy, sometimes generating plausible-sounding but incorrect information, a phenomenon known as “hallucination.” Another limitation is the computational cost of training and running these models, which puts them out of reach for many researchers and developers with modest budgets. Finally, controlling the output and ensuring desired properties can be challenging, requiring careful prompting and parameter tuning. For instance, while DALL-E 2 can create detailed images, accurately conveying subtle nuances of artistic style or specific object characteristics can still be difficult.
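Parameter tuning can be illustrated with sampling temperature, a setting many text generators expose. The sketch below is a minimal illustration under assumptions: the candidate tokens and their scores (logits) are made up, and the functions are hypothetical rather than any particular tool's API. Dividing the scores by a temperature before a softmax sharpens the distribution when the temperature is below 1 and flattens it when above 1.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature.

    Lower temperatures concentrate probability on the top-scoring token;
    higher temperatures spread it out, making output more varied.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-token candidates and scores, for illustration only.
tokens = ["cat", "dog", "ferret"]
logits = [2.0, 1.0, 0.1]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(0)  # fixed seed so the draw is reproducible
print(sample_token(tokens, logits, temperature=0.7, rng=rng))
```

A low temperature makes output more predictable but repetitive; a high one makes it more varied but more likely to drift. That trade-off is one reason controlling output often takes experimentation.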

Potential Advancements in the Next 5 Years

Over the next five years, generative AI is likely to advance significantly. Improved algorithms and increased computational power will probably yield models that generate even more realistic and coherent content. Control over the style and content of generated outputs should also become finer-grained, allowing more precise manipulation and customization. Research into mitigating biases and improving factual accuracy is ongoing, and progress on these crucial issues is likely. More efficient models would also broaden accessibility, enabling wider adoption across fields. For example, we might see personalized educational tools that use generative AI to tailor learning to individual student needs, or more sophisticated AI-powered design tools that enable rapid prototyping and iteration in architecture and product design.

Comparison of Generative AI Architectures

Different generative AI architectures have distinct strengths and weaknesses. Transformer-based large language models (LLMs), like those powering GPT-3, excel at text generation but are computationally expensive and can struggle with nuanced reasoning. Diffusion models, such as those used in DALL-E 2 and Stable Diffusion, generate high-quality images but require extensive training data and significant computational resources, and producing each image takes many iterative denoising steps. Generative Adversarial Networks (GANs) offer an alternative approach but can be unstable to train. The choice of architecture depends on the application and the trade-off between computational cost, output quality, and ease of training: LLMs suit chatbot applications, while diffusion models are better suited to image generation. The future likely involves hybrid approaches that combine architectures to overcome their individual limitations.

Ethical Considerations and Societal Impacts

The rapid advancement of generative AI presents a complex interplay of opportunities and challenges. Generative AI offers immense potential across many sectors, but its deployment requires careful consideration of the ethical implications and potential societal impacts. Failing to address these concerns could lead to unforeseen and potentially harmful consequences.

Potential Biases in Generative AI Algorithms and Mitigation Strategies

Generative AI models are trained on vast datasets, and if these datasets reflect existing societal biases (e.g., gender, racial, or socioeconomic), the AI system will likely perpetuate and even amplify these biases in its outputs. For example, a language model trained on text written predominantly by or about men might generate text that disproportionately features male characters or perspectives. Mitigation strategies include carefully curating training datasets for representation and balance, applying bias detection and mitigation techniques during model development, and rigorously testing and evaluating the AI’s output to identify and address biases. Ongoing monitoring and adaptation of the model are also crucial, because biases in data evolve over time.
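One very simple form of output testing is a representation audit. The sketch below counts gendered pronouns across a batch of generated texts and reports each group's share. The word lists, the sample outputs, the function names, and the 0.7 flagging threshold are all hypothetical choices for illustration; pronoun counting is a crude proxy, and serious bias evaluations use far richer methods.

```python
import re
from collections import Counter

# Illustrative word lists; a real audit would need far broader coverage.
GROUP_TERMS = {
    "male": {"he", "him", "his", "himself"},
    "female": {"she", "her", "hers", "herself"},
}

def count_group_terms(texts):
    """Count occurrences of each group's terms across all texts."""
    counts = Counter({group: 0 for group in GROUP_TERMS})
    for text in texts:
        for word in re.findall(r"[a-z']+", text.lower()):
            for group, terms in GROUP_TERMS.items():
                if word in terms:
                    counts[group] += 1
    return counts

def representation_shares(texts):
    """Return each group's share of all matched terms (0.0 if none matched)."""
    counts = count_group_terms(texts)
    total = sum(counts.values())
    return {g: (c / total if total else 0.0) for g, c in counts.items()}

def is_skewed(texts, threshold=0.7):
    """Flag the batch if any single group's share exceeds the threshold."""
    return any(share > threshold for share in representation_shares(texts).values())

# Hypothetical model outputs, invented for this example.
samples = [
    "The engineer said he would fix the bug himself.",
    "The doctor finished his rounds before he left.",
    "The nurse said she was tired.",
]
print(representation_shares(samples))
print(is_skewed(samples))
```

Running such a check on every model release, with the same prompts, is one way to make the "ongoing monitoring" above measurable, even if the metric itself is simplistic.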

Implications for Intellectual Property Rights

The use of generative AI raises significant questions regarding intellectual property rights. When an AI generates content, such as an image, piece of music, or text, the question of ownership becomes complex. Is the owner the person who developed the AI, the user who prompted the AI, or the AI itself? Existing copyright laws are not fully equipped to handle this novel situation, leading to potential legal disputes and uncertainty for creators and users alike. Clearer legal frameworks are needed to define ownership and licensing rights for AI-generated content, balancing the interests of developers, users, and the potential for public access to creative works. For instance, a debate is emerging around whether AI-generated art should be eligible for copyright protection.

Potential for Misuse and the Need for Responsible Development

Generative AI’s ability to create realistic, convincing content also makes it open to misuse. Deepfakes, for example, can be used to create fraudulent videos or audio recordings that damage reputations or influence elections. Similarly, AI-generated text could be used to spread misinformation or propaganda. Responsible development involves implementing safeguards against misuse, such as watermarking AI-generated content, developing robust techniques to detect deepfakes and other AI-generated content, and promoting media literacy so that people can recognize such content. Furthermore, ethical guidelines and industry standards are necessary to ensure that the development and deployment of generative AI align with societal values and minimize potential harm.
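Watermarking schemes vary widely. As a much simpler illustration of the underlying provenance idea, the sketch below attaches a signed tag to generated content, so that anyone holding the same secret key can later check that the content came from a given generator and has not been altered. This is a sketch under loud assumptions: the key, the record format, and the function names are hypothetical. The tag travels alongside the content rather than being embedded in it, so it is lost if the content is copied without it, and it cannot detect deepfakes produced elsewhere.

```python
import hashlib
import hmac
import json

# Hypothetical secret held by the generator operator; never hard-code real keys.
SECRET_KEY = b"example-key-for-illustration-only"

def _sign(content: str, model_name: str) -> str:
    """Compute an HMAC-SHA256 signature over the content and model name."""
    payload = json.dumps({"content": content, "model": model_name}, sort_keys=True)
    return hmac.new(SECRET_KEY, payload.encode("utf-8"), hashlib.sha256).hexdigest()

def tag_content(content: str, model_name: str) -> dict:
    """Return a record pairing the content with a signed provenance tag."""
    return {"content": content, "model": model_name,
            "signature": _sign(content, model_name)}

def verify_content(record: dict) -> bool:
    """Check that the record's signature matches its content and model name."""
    expected = _sign(record["content"], record["model"])
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, record["signature"])

record = tag_content("A generated caption.", "hypothetical-model-v1")
print(verify_content(record))

tampered = dict(record, content="An edited caption.")
print(verify_content(tampered))
```

Standardized provenance efforts use public-key signatures and embedded metadata rather than a shared secret, and true watermarks hide the signal in the content itself; this sketch only shows the verify-or-reject principle.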

Impact on Employment and the Workforce

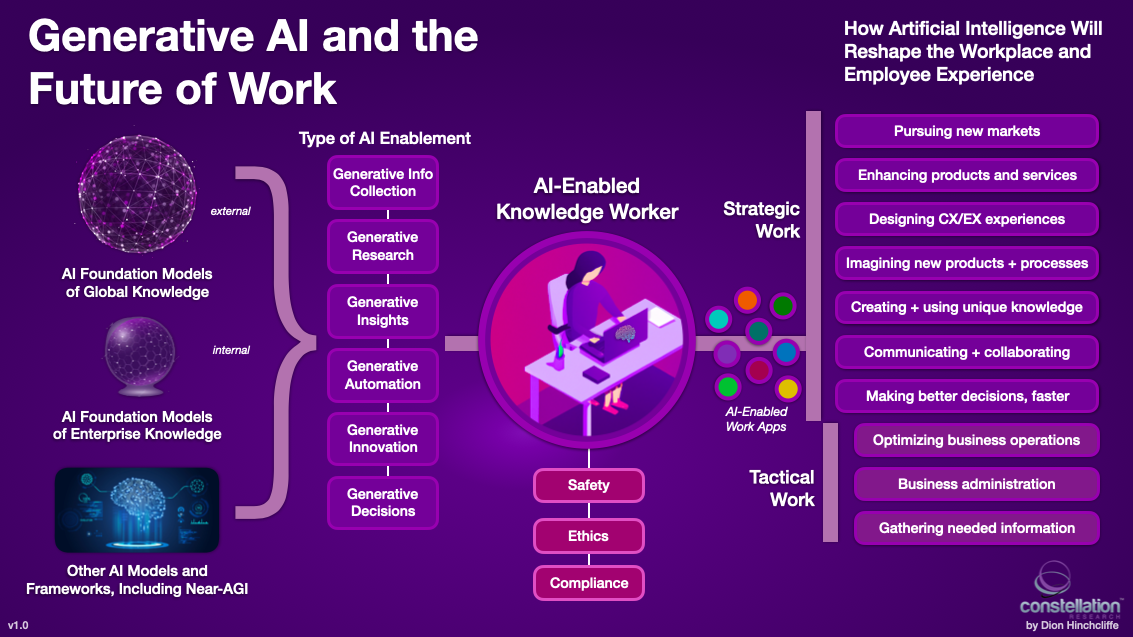

The automation potential of generative AI raises concerns about its impact on employment. Some jobs may be automated entirely, while others may be transformed, requiring workers to learn new skills and take on new roles. This transition may displace workers in certain sectors, calling for proactive measures such as retraining and upskilling programs to prepare the workforce for the changing job market. However, generative AI also has the potential to create new jobs and industries, particularly in AI development, maintenance, and ethical oversight. The net impact on employment is uncertain and will likely vary across sectors and skill levels. For instance, while some routine customer-service tasks may be automated, new roles focusing on AI system management and human-AI collaboration may emerge.

The future of generative AI tools is undeniably bright, brimming with potential to solve complex problems and unlock unprecedented opportunities. However, responsible development, ethical considerations, and robust security measures are paramount to ensure its beneficial integration into society. Navigating the challenges and harnessing the power of this technology will require a collaborative effort across industries, research institutions, and policymakers, paving the way for a future where AI enhances human capabilities and drives progress for all.

The future of generative AI tools also depends on processing power. Quantum computing could eventually ease current limitations in speed and complexity, although any practical advantage for generative AI remains speculative. Cloud-based services already make early quantum hardware accessible to researchers, and this accessibility could accelerate the development of more sophisticated and efficient generative AI models in the coming years.

Efficient resource management matters just as much, which makes sustainable computing crucial. The growing computational demands of these tools call for environmentally conscious practices, and initiatives such as green cloud computing are therefore vital for the long-term viability and responsible development of generative AI.
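To make the resource question concrete, a common back-of-envelope estimate multiplies accelerator count, average power draw, and run time, scales the result by the facility's power usage effectiveness (PUE), and converts energy to emissions using the grid's carbon intensity. The sketch below encodes that arithmetic. Every input value is a hypothetical placeholder, not a measurement of any real model, and real accounting includes more factors (idle power, networking, cooling variation, embodied emissions).

```python
from dataclasses import dataclass

@dataclass
class TrainingRun:
    """Hypothetical description of a training job, for estimation only."""
    num_accelerators: int
    avg_power_watts: float      # average draw per accelerator
    hours: float                # wall-clock duration
    pue: float                  # power usage effectiveness of the facility (>= 1.0)
    grid_kg_co2_per_kwh: float  # carbon intensity of the electricity supply

def energy_kwh(run: TrainingRun) -> float:
    """Facility-level energy: accelerator energy scaled by PUE, in kWh."""
    it_energy_kwh = run.num_accelerators * run.avg_power_watts * run.hours / 1000.0
    return it_energy_kwh * run.pue

def emissions_kg(run: TrainingRun) -> float:
    """Operational emissions in kg CO2-equivalent."""
    return energy_kwh(run) * run.grid_kg_co2_per_kwh

# Placeholder inputs chosen only to show the arithmetic.
run = TrainingRun(num_accelerators=64, avg_power_watts=300.0, hours=100.0,
                  pue=1.2, grid_kg_co2_per_kwh=0.4)
print(f"{energy_kwh(run):.0f} kWh, {emissions_kg(run):.0f} kg CO2e")

# The same job in a more efficient facility on a cleaner grid.
greener = TrainingRun(64, 300.0, 100.0, pue=1.1, grid_kg_co2_per_kwh=0.05)
print(f"{energy_kwh(greener):.0f} kWh, {emissions_kg(greener):.0f} kg CO2e")
```

With these placeholder inputs, the second run uses slightly less energy but produces roughly an eighth of the emissions, which illustrates why where and how models are trained can matter as much as how much compute they use.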

Ultimately, the future of these powerful tools depends on our ability to power them sustainably.